In the Data Intelligence Hub environment, security is not just a technical requirement; it is a prerequisite for data sovereignty. On the Build & Operate platform, managing who can access your data assets must be automated, auditable, and resilient.

Manual firewall changes and ticket-based approvals do not scale in sovereign, multi-tenant data platforms.

This article explores how we automate firewall management with Kubernetes Custom Resource Definitions (CRDs) to build a self-service Network Access Policy engine.

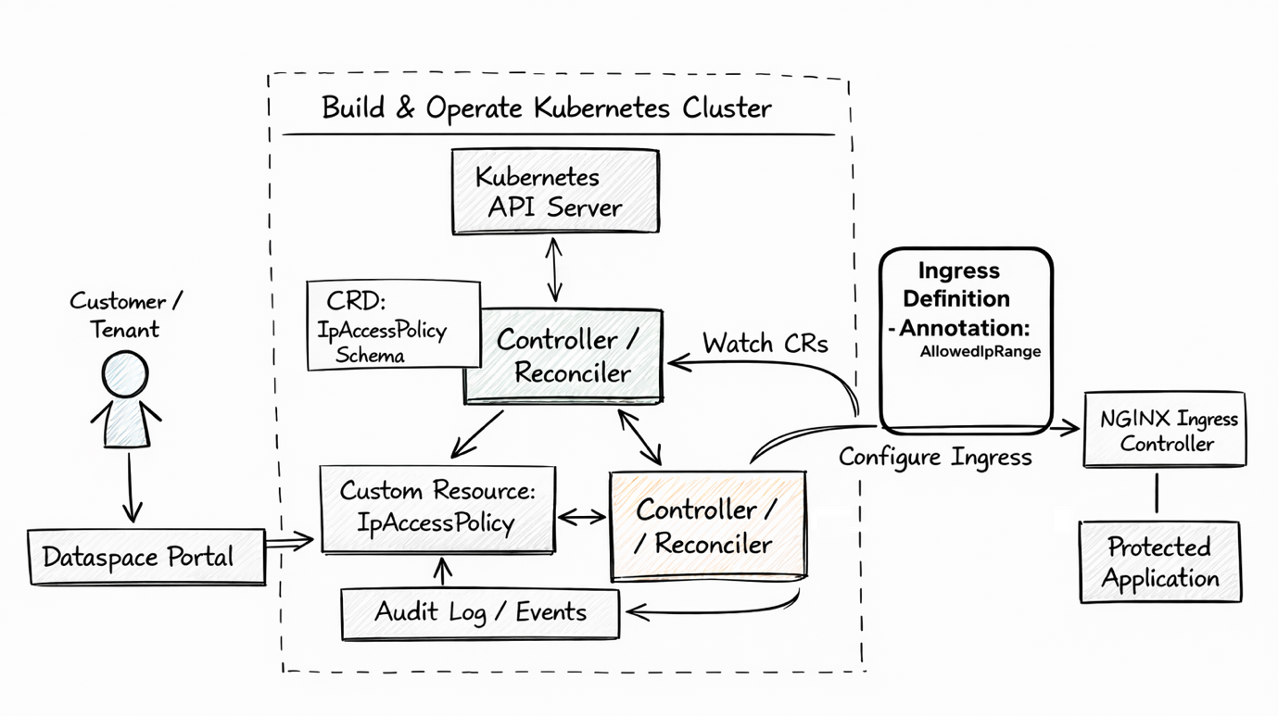

Figure 1. High-level architecture for declarative network access control using Kubernetes CRDs

Architectural vision: Security as code

In a traditional setup, changing an allowed IP address involves manual tickets or proprietary API calls. In the Build & Operate ecosystem, we treat access as a native Kubernetes resource, built from three components:

- Dataspace portal: A customer-facing dashboard where customers declare, add, and remove allowed IP (CIDR) ranges for network access

- Custom resource (IpAccessPolicy): A Kubernetes object that stores the desired state of network permissions, defined and validated by a CRD

- Controller: A background process that watches these policies and configures the underlying infrastructure in real time

Defining the "Access policy" contract

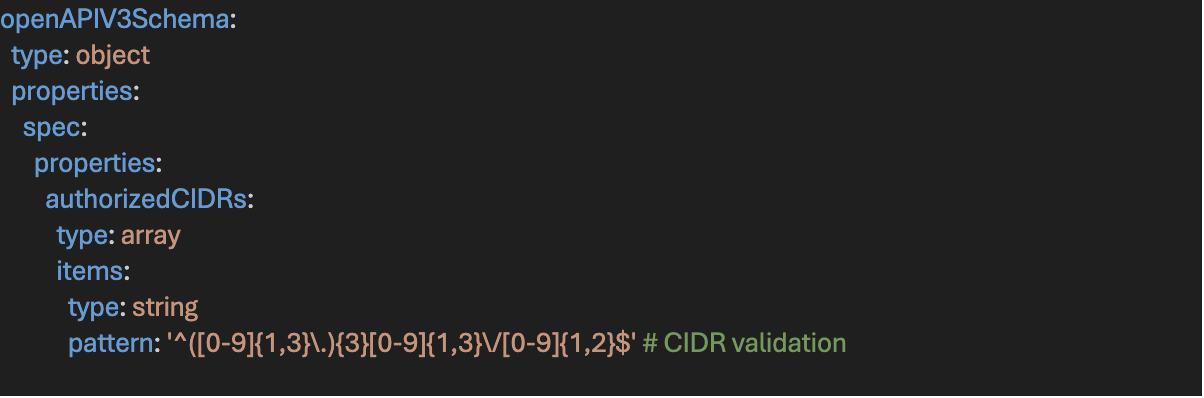

Using a CRD allows us to define exactly what an access request looks like. Because the CRD carries an OpenAPI v3 schema, the Kubernetes API server acts as a gatekeeper. If a user tries to enter an invalid IP address (e.g., 999.9.9.9), the request is rejected immediately, long before it reaches your cloud firewall.

In our Data Intelligence Hub implementation, the policy includes:

- authorizedCIDRs: The specific network ranges granted access.

- clusterId: The target environment where the rules must be applied.

- status fields: Real-time feedback showing whether the policy is Pending, Active, or Failed.
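The contract above can be pictured as a small typed model. This is a minimal Python illustration, not the actual CRD.

- The class names are hypothetical.
- The camelCase keys mirror the field names listed above.
- It assumes that a new policy starts in the Pending phase.

The split between a spec written by the portal backend and a status written only by the controller follows the usual Kubernetes convention.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(str, Enum):
    """Status phases named in the article."""
    PENDING = "Pending"
    ACTIVE = "Active"
    FAILED = "Failed"


@dataclass
class IpAccessPolicySpec:
    """Desired state, written by the portal backend."""
    authorized_cidrs: list[str]  # serialized as authorizedCIDRs
    cluster_id: str              # serialized as clusterId


@dataclass
class IpAccessPolicyStatus:
    """Observed state, written only by the controller."""
    phase: Phase = Phase.PENDING  # assumption: new policies start as Pending


@dataclass
class IpAccessPolicy:
    """Hypothetical in-memory model of one IpAccessPolicy resource."""
    name: str
    spec: IpAccessPolicySpec
    # Each policy gets its own status object (no shared mutable default).
    status: IpAccessPolicyStatus = field(default_factory=IpAccessPolicyStatus)

    def spec_as_json(self) -> dict:
        """Return the spec using the camelCase field names from the article."""
        return {
            "authorizedCIDRs": list(self.spec.authorized_cidrs),
            "clusterId": self.spec.cluster_id,
        }
```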

To understand how this works in practice, we look at the relationship between the Custom Resource (the customer’s intent) and the Ingress (the actual enforcement).

The customer’s intent (Custom resource)

Declaring access via the dataspace portal

From the user’s perspective, network access is managed through the Data Intelligence Hub portal.

When a customer adds or removes an IP address, the portal's backend service translates this action into a Kubernetes IpAccessPolicy custom resource.
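Here is a minimal sketch of that translation step. It assumes the backend keeps the full CIDR list in one IpAccessPolicy per customer and rewrites it on each portal action. The function names and the `networking.example.com/v1` API group are hypothetical placeholders; the real backend, group, and version are not described here.

```python
def apply_portal_action(current: list[str], action: str, cidr: str) -> list[str]:
    """Return the CIDR list that results from one portal 'add' or 'remove' action."""
    value = cidr.strip()  # tolerate stray whitespace from form input
    cidrs = set(current)
    if action == "add":
        cidrs.add(value)      # adding an existing range is a no-op
    elif action == "remove":
        cidrs.discard(value)  # removing a missing range is a no-op
    else:
        raise ValueError(f"unknown action: {action!r}")
    # Sorted output keeps the generated resource deterministic.
    return sorted(cidrs)


def build_policy_manifest(name: str, cluster_id: str, cidrs: list[str]) -> dict:
    """Build the IpAccessPolicy object the backend would submit to the API server."""
    return {
        "apiVersion": "networking.example.com/v1",  # hypothetical group/version
        "kind": "IpAccessPolicy",
        "metadata": {"name": name},
        "spec": {"authorizedCIDRs": list(cidrs), "clusterId": cluster_id},
    }
```

Sorting and de-duplicating make the generated resource deterministic, so resubmitting the same set of ranges produces no spurious change. Sending the manifest to the cluster is omitted to keep the sketch self-contained; one option is the official Kubernetes Python client's `CustomObjectsApi`.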

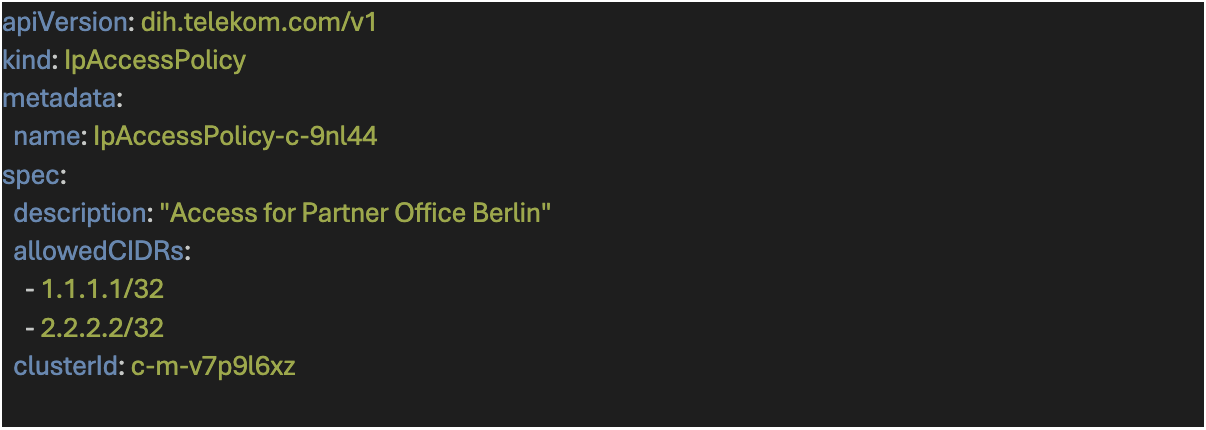

The generated custom resource

When a customer enters data into the Data Intelligence Hub portal, the backend generates an IpAccessPolicy. The YAML is intentionally simple and captures only business intent. This resource is the single source of truth for network access:

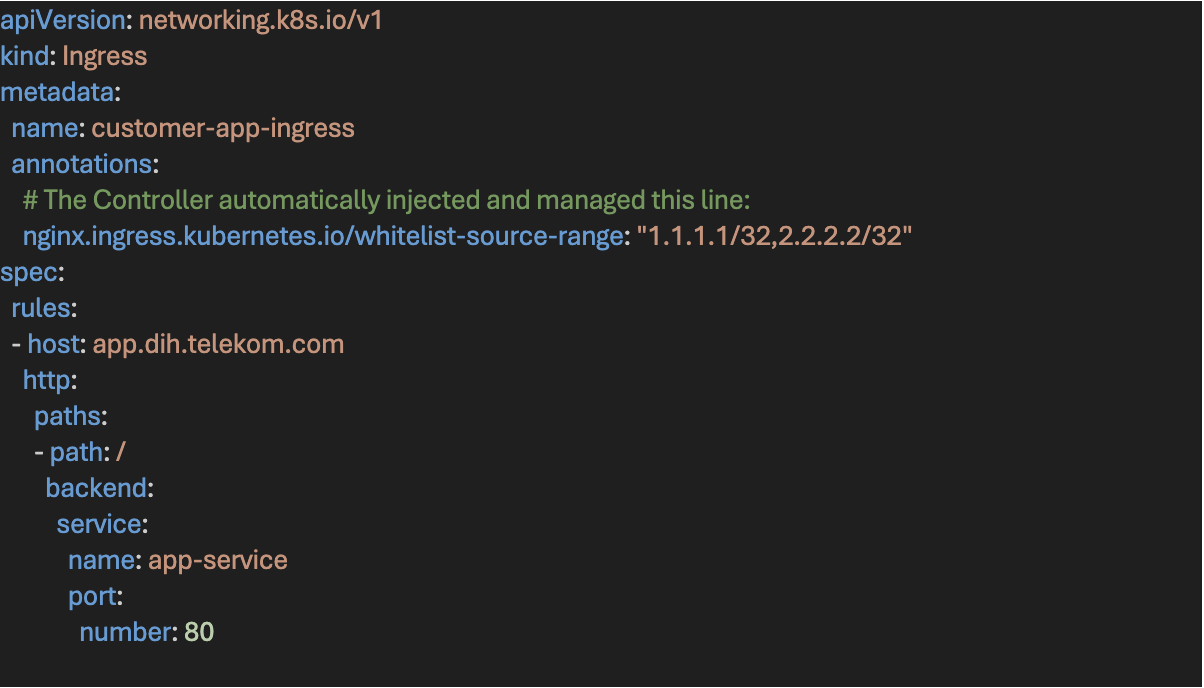

The technical enforcement (NGINX Ingress)

The controller watches IpAccessPolicy resources for changes. When it detects an update, it automatically locates the corresponding Kubernetes Ingress and patches its metadata.

The user never touches this technical configuration; enforcement is fully automated and applied directly at the Kubernetes Ingress level.
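The text above does not spell out how the controller finds "the corresponding" Ingress. One plausible approach, sketched here as an assumption, is an opt-in label on the Ingress that names the policy; the label key is hypothetical. The sketch also assumes the community ingress-nginx controller, whose `nginx.ingress.kubernetes.io/whitelist-source-range` annotation accepts a comma-separated list of CIDRs.

```python
WHITELIST_ANNOTATION = "nginx.ingress.kubernetes.io/whitelist-source-range"
POLICY_LABEL = "example.com/ip-access-policy"  # hypothetical label: Ingress -> policy


def find_target_ingresses(policy_name: str, ingresses: list[dict]) -> list[dict]:
    """Select the Ingress objects that opt in to a policy via the (hypothetical) label."""
    return [
        ing for ing in ingresses
        if ing.get("metadata", {}).get("labels", {}).get(POLICY_LABEL) == policy_name
    ]


def desired_annotation_value(authorized_cidrs: list[str]) -> str:
    """Render the CIDR list in the comma-separated form ingress-nginx expects."""
    if not authorized_cidrs:
        # An empty allowlist is ambiguous; refuse rather than risk opening access.
        raise ValueError("refusing to render an empty allowlist")
    return ",".join(sorted(set(authorized_cidrs)))
```

Refusing to render an empty list is a deliberate choice in this sketch. An empty allowlist is ambiguous, and failing loudly is safer than risking an Ingress that is suddenly open.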

The mechanic: The reconciliation loop

The power of this system lies in the reconciliation loop. Unlike a one-time script, the controller (reconciler) runs continuously. It follows the Observe-Diff-Act pattern and then reports the result:

- Observe: The controller watches the Kubernetes API for new or updated IpAccessPolicy objects.

- Diff: It compares the desired state in the IpAccessPolicy resource against the actual state of the network (e.g., cloud security groups or NGINX Ingress annotations).

- Act: If a discrepancy is found, the controller executes the necessary API calls to align the two states.

- Verify: It updates the policy's status (e.g., to Active) so the portal can show the user a green "Success" checkmark.
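The four steps can be illustrated with an in-memory stand-in for the cluster. This is a simplified sketch, not the production controller.

- `FakeCluster` and `reconcile` are hypothetical.
- The Ingress annotation assumes ingress-nginx.
- In practice, a framework such as controller-runtime (Go) or kopf (Python) would invoke the reconcile function whenever a watched object changes.

```python
from dataclasses import dataclass, field

ANNOTATION = "nginx.ingress.kubernetes.io/whitelist-source-range"  # assumes ingress-nginx


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for the Kubernetes API."""
    policies: dict[str, list[str]] = field(default_factory=dict)  # policy -> CIDRs
    ingress_annotations: dict[str, dict[str, str]] = field(default_factory=dict)
    statuses: dict[str, str] = field(default_factory=dict)  # policy -> phase
    api_writes: int = 0  # counts how often the "Act" step wrote something


def reconcile(cluster: FakeCluster, policy: str, ingress: str) -> None:
    """One Observe-Diff-Act-Verify pass for a single policy/Ingress pair."""
    # Observe: read the desired state (policy) and the actual state (Ingress).
    if policy not in cluster.policies:
        return  # policy was deleted; cleanup is out of scope for this sketch
    desired = ",".join(sorted(set(cluster.policies[policy])))
    annotations = cluster.ingress_annotations.get(ingress)
    if annotations is None:
        cluster.statuses[policy] = "Failed"  # no Ingress to enforce on
        return
    actual = annotations.get(ANNOTATION)

    # Diff + Act: write only when the states disagree, so reruns are harmless.
    if actual != desired:
        annotations[ANNOTATION] = desired
        cluster.api_writes += 1

    # Verify: re-read and report the outcome in the policy status.
    # (Against this in-memory fake the check always passes; a real controller
    # would read the object back from the API server.)
    ok = cluster.ingress_annotations[ingress].get(ANNOTATION) == desired
    cluster.statuses[policy] = "Active" if ok else "Failed"
```

Calling `reconcile` twice in a row performs only one write. A manual edit to the annotation is reverted on the next pass, which is the anti-drift behavior listed under "Why this architecture?".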

The schema and controller logic

The schema (the gatekeeper)

To ensure the stability of the Build & Operate platform, the CRD defines a strict schema. Below is a snippet of the validation logic that prevents malformed data.

Example schema validation for CIDR inputs:

This validation ensures syntactic correctness; semantic CIDR validation is handled by the controller logic.
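The division of labor between the schema and the controller can be sketched in Python.

- **Syntactic check.** The regular expression is an assumption about the kind of `pattern` a CRD schema could carry: IPv4 only, octets 0 to 255, prefix length 0 to 32. It rejects 999.9.9.9 outright.
- **Semantic checks.** These are illustrative examples only, since the controller's actual checks are not listed here. They flag a network address with host bits set (10.0.0.5/24) and the catch-all 0.0.0.0/0.

```python
import ipaddress
import re

# One IPv4 octet, 0-255, without leading zeros.
OCTET = r"(25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])"
# Assumed schema-style pattern: four octets plus a prefix length of 0-32.
IPV4_CIDR_PATTERN = re.compile(rf"^{OCTET}(\.{OCTET}){{3}}/(3[0-2]|[12]?[0-9])$")


def syntactically_valid(value: str) -> bool:
    """What the API server could enforce through a schema pattern."""
    return IPV4_CIDR_PATTERN.fullmatch(value) is not None


def semantic_errors(value: str) -> list[str]:
    """Example checks a regex cannot express cleanly, left to the controller."""
    try:
        # strict parsing raises ValueError if host bits are set (e.g. 10.0.0.5/24)
        net = ipaddress.IPv4Network(value)
    except ValueError as exc:
        return [str(exc)]
    errors = []
    if net.prefixlen == 0:
        errors.append("0.0.0.0/0 would allow every IPv4 address")  # example rule
    return errors
```

Note that 10.0.0.5/24 passes the pattern but fails the semantic check. This is exactly the gap between the two layers described above.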

The controller logic

The controller runs as a microservice inside the cluster. Its core logic is idempotent: it can run repeatedly but only updates the Ingress when the current configuration differs from the desired state.

It uses JSON Patch operations to modify only the relevant security annotations, ensuring that application-specific ingress configuration remains untouched.
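Here is a minimal sketch of such a patch in the JSON Patch format (RFC 6902). It assumes the ingress-nginx `whitelist-source-range` annotation, and the helper names are hypothetical. One detail is easy to miss: annotation keys contain a `/`, which must be escaped as `~1` in a JSON Pointer path (RFC 6901).

```python
ANNOTATION = "nginx.ingress.kubernetes.io/whitelist-source-range"  # assumes ingress-nginx


def json_pointer_escape(token: str) -> str:
    """Escape one JSON Pointer reference token (RFC 6901): '~' -> '~0', '/' -> '~1'."""
    # '~' must be escaped first, otherwise the '~' in '~1' would be escaped again.
    return token.replace("~", "~0").replace("/", "~1")


def annotation_patch(ingress: dict, key: str, desired: str) -> list[dict]:
    """Build a JSON Patch (RFC 6902) that changes a single Ingress annotation.

    Returns [] when the annotation already has the desired value, so repeated
    calls are idempotent.
    """
    annotations = ingress.get("metadata", {}).get("annotations")
    if annotations is None:
        # The annotations map itself is missing, so create it with one entry.
        return [{"op": "add", "path": "/metadata/annotations", "value": {key: desired}}]
    if annotations.get(key) == desired:
        return []  # already in the desired state: nothing to send
    op = "replace" if key in annotations else "add"
    path = "/metadata/annotations/" + json_pointer_escape(key)
    return [{"op": op, "path": path, "value": desired}]
```

The resulting list can be serialized with `json.dumps` and applied, for example, with `kubectl patch ingress <name> --type=json -p '<patch>'`. Only one path is touched, so other annotations on the Ingress are left as they are. An empty result means the controller has nothing to do.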

Why this architecture?

- Anti-drift: If someone manually changes the Ingress annotation via kubectl, the controller detects the "drift" on its next reconciliation and automatically overwrites it to match the authorized policy.

- Input validation: The CRD schema rejects invalid IP formats (like 999.9.9.9) at the API level, preventing broken configurations from ever reaching NGINX.

- Auditability: Every policy change passes through the Kubernetes API server and is recorded in the Kubernetes audit log, providing a transparent history of who authorized which network path.

Appendix:

- CRD (Custom Resource Definition): A way to extend the Kubernetes API with your own object types.

- Controller/Reconciler: The software "brain" that ensures the real world matches your YAML definition.

- CIDR: The standard notation for describing a block of IP addresses.

- Idempotency: A property where an operation can be applied multiple times without changing the result beyond the initial application (e.g., adding an IP that is already there doesn't cause an error).